The Cyberspace Administration of China (CAC) and three other authorities jointly unveiled the artificial intelligence generated content (AIGC) labelling measures (Labelling Measures) in March this year. Alongside the Labelling Measures, China’s National Cybersecurity Standardisation Technical Committee (TC260) released a set of mandatory national standards on AIGC labelling methods (Labelling Standards).

Both the Labelling Measures and the Labelling Standards took effect this week, on 1 September. Market players in China such as Tencent, Douyin, RedNote, Weibo and DeepSeek have already published statements on the measures they are taking, and the steps their users should take, to comply with the Labelling Measures.

The Labelling Measures are the first special-purpose legislation under China’s three-pillar AI legal framework, which comprises the algorithmic recommendation provisions, the deep synthesis provisions and the interim GenAI measures. They establish a dual-track labelling system that sets obligations for responsible parties across the entire AIGC value chain, from generation through distribution to end users.

“Explicit-Implicit” dual-track labelling system

The Labelling Measures introduce two types of labels:

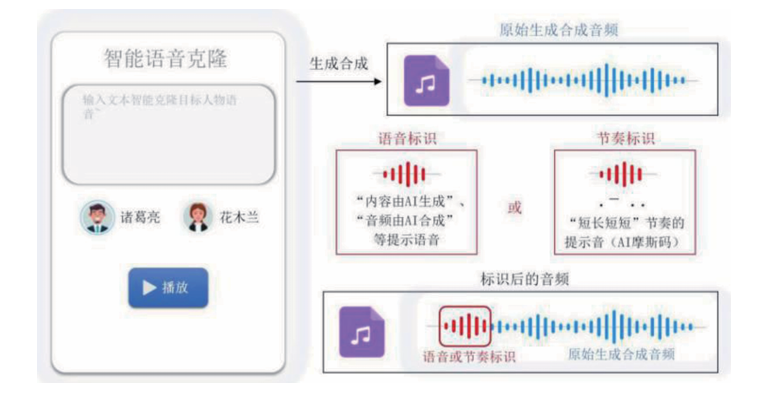

- Explicit labels are added to AIGC, or within the interface of interactive scenarios, and can be clearly perceived by users. The Labelling Measures and the supporting Labelling Standards provide clear operational guidelines on how to label AIGC in text, image, audio, video and virtual-scene formats.

For example, images should be marked with wording such as “AI-generated” at appropriate positions, and audio should incorporate specific rhythmic cues.

(Examples of explicit labelling in image and audio scenarios, from the Labelling Standards)

- Implicit labels are added to AIGC files through technical measures and are not readily perceivable by users. As a baseline mandatory obligation, implicit labels must be embedded in the metadata of files containing AIGC. Such labels must include, for example, information on the attributes of the content, the name or code of the service provider, and a content number. Service providers are also encouraged to embed digital watermarks within the AIGC itself.

Full lifecycle management

The Labelling Measures clarify the obligations of four players in the end-to-end lifecycle of AIGC:

| Regulated subject | Obligations |
|---|---|
| Internet information service providers | |
| Internet information content dissemination service providers | |
| App distribution platforms | |
| Users | |
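For Internet information content dissemination service providers, our reading of the Labelling Measures is that platforms must check file metadata for implicit labels and add a prominent notice whose strength depends on the evidence available: confirmed AIGC where an implicit label is found, possible AIGC where only the user declares it, and suspected AIGC where the platform itself detects explicit labels or other generation traces. The Python sketch below models that tiered decision under stated assumptions; the metadata field name `content_attribute`, the notice wording and the `choose_notice` helper are all hypothetical.

```python
from enum import Enum
from typing import Optional


class Notice(Enum):
    """Hypothetical notice wording for each evidence tier."""
    CONFIRMED = "AI-generated content"
    POSSIBLE = "This content may be AI-generated"
    SUSPECTED = "This content is suspected to be AI-generated"


def choose_notice(
    metadata: Optional[dict],
    user_declared_aigc: bool,
    traces_detected: bool,
) -> Optional[Notice]:
    """Pick the notice a dissemination platform would show (simplified sketch).

    metadata: the file's metadata as parsed by the platform (None if absent).
    user_declared_aigc: whether the uploader declared the content as AIGC.
    traces_detected: whether the platform's own checks found explicit labels
        or other generation traces.
    """
    # Assumed tiers based on our reading of the Labelling Measures; verify against the text.
    # 'content_attribute' is a hypothetical field name, not one taken from the Labelling Standards.
    has_implicit_label = bool(metadata) and metadata.get("content_attribute") == "AI-generated"
    if has_implicit_label:
        return Notice.CONFIRMED   # implicit label found in metadata
    if user_declared_aigc:
        return Notice.POSSIBLE    # no implicit label, but the user declared it
    if traces_detected:
        return Notice.SUSPECTED   # no label or declaration, but traces detected
    return None                   # no notice triggered under this sketch


if __name__ == "__main__":
    print(choose_notice(None, user_declared_aigc=True, traces_detected=False).value)
    # prints "This content may be AI-generated"
```

The checks run from strongest to weakest evidence, so a file carrying an implicit label is always shown the confirmed notice even if the user also declared it.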

Looking ahead

Last week, China's State Council issued the “AI Plus” action plan, which aims to promote the use of AI applications across the economy, society and government. With the rapid rise of AI technologies and products, of which DeepSeek is a leading example, China’s AI industry has been steadily maturing, and GenAI will no doubt remain a key focus of AI legislation and regulation in China.

In February, CAC announced its 2025 “Qinglang” (“clear and bright”) series of special actions, which lists addressing the abuse of AI technology as one of its key tasks. Following that announcement, CAC launched a three-month nationwide special campaign in April to regulate AI services. The campaign targeted issues including non-compliant AI products, improper training data management, weak security measures, AI-generated rumours, fake information, and violations of minors' rights.

TC260 has recently issued a number of generative AI security standards including service security requirements (GB/T 45654-2025), data annotation security specifications (GB/T 45674-2025), and pre-training and fine-tuning training data security guidelines (GB/T 45652-2025). These standards will take effect from 1 November 2025.

In addition, while different jurisdictions have reached some consensus on regulatory approaches to AIGC labelling, there are still no unified rules or standards. In their absence, and given the uncertain geopolitical climate in which multinational technology and other enterprises currently operate, businesses engaged in cross-border activities may face higher technical development and compliance costs.

By staying ahead of regulatory developments and enforcement trends, and preparing for upcoming requirements, organisations can better manage risk, foster trust, and seize emerging opportunities in the Chinese and global AI markets. Our tech-focused team is here to help!
